Integrated Machine Learning

Why Machine Learning?

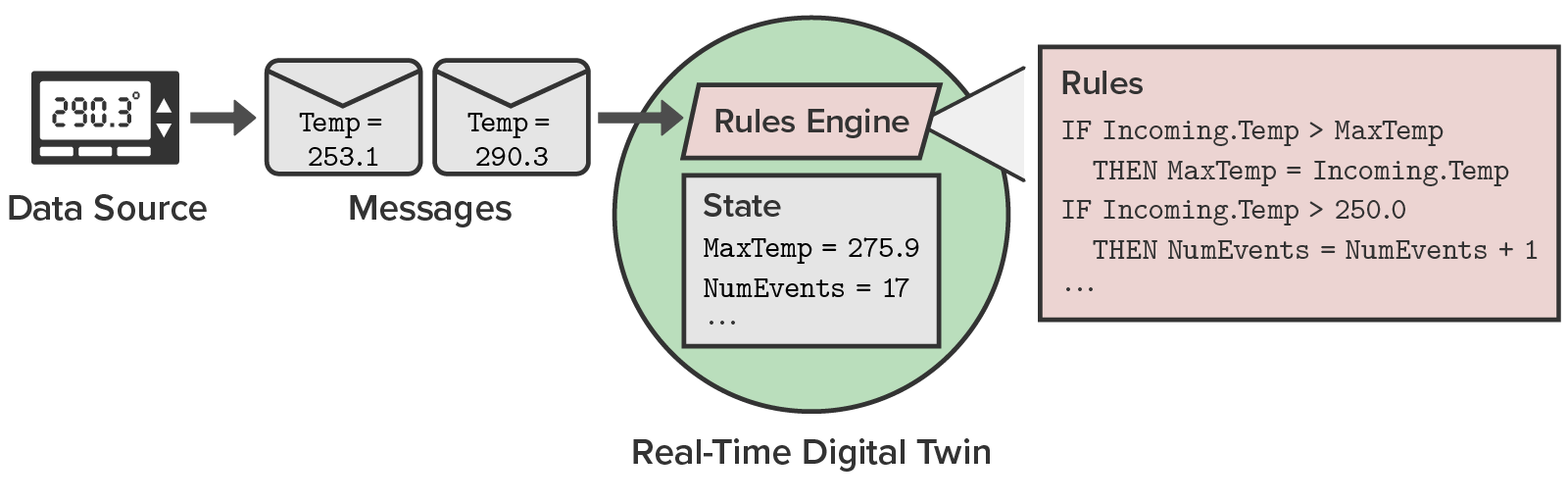

Incorporating machine learning techniques into real-time digital twins takes their power and simplicity to the next level. Creating streaming analytics code that surfaces emerging issues hidden within a stream of telemetry can be challenging. In many cases, the algorithm itself may be unknown because the underlying processes which lead to device failures are not well understood. In these cases, a machine learning (ML) algorithm can be trained to recognize abnormal telemetry patterns by feeding it thousands of historic telemetry messages that have been classified as normal or abnormal. No manual analytics coding is required. After training and testing, the ML algorithm can then be put to work monitoring incoming telemetry and alerting when it observes suspected abnormal behavior.

Adding Machine Learning to Real-Time Digital Twins

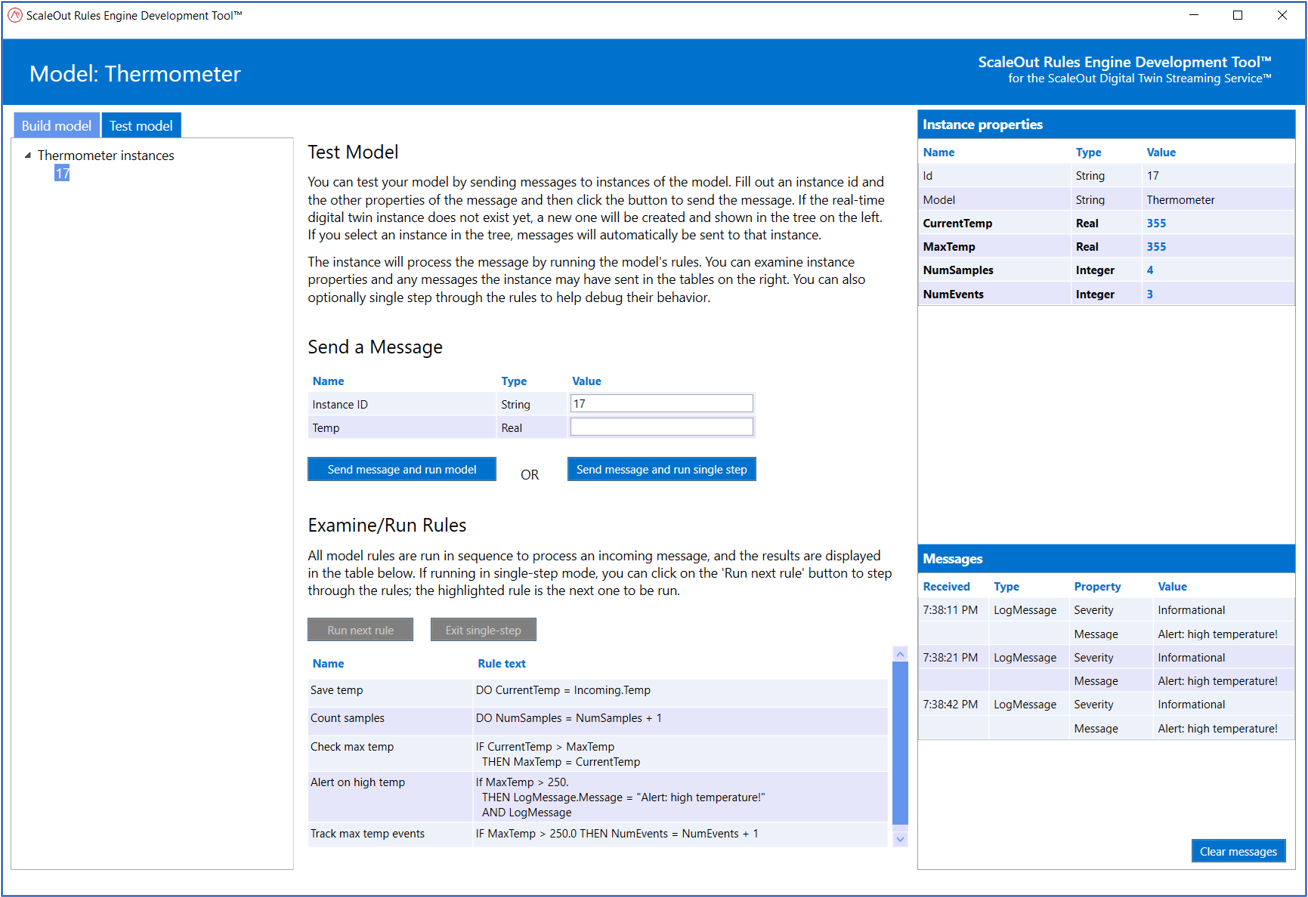

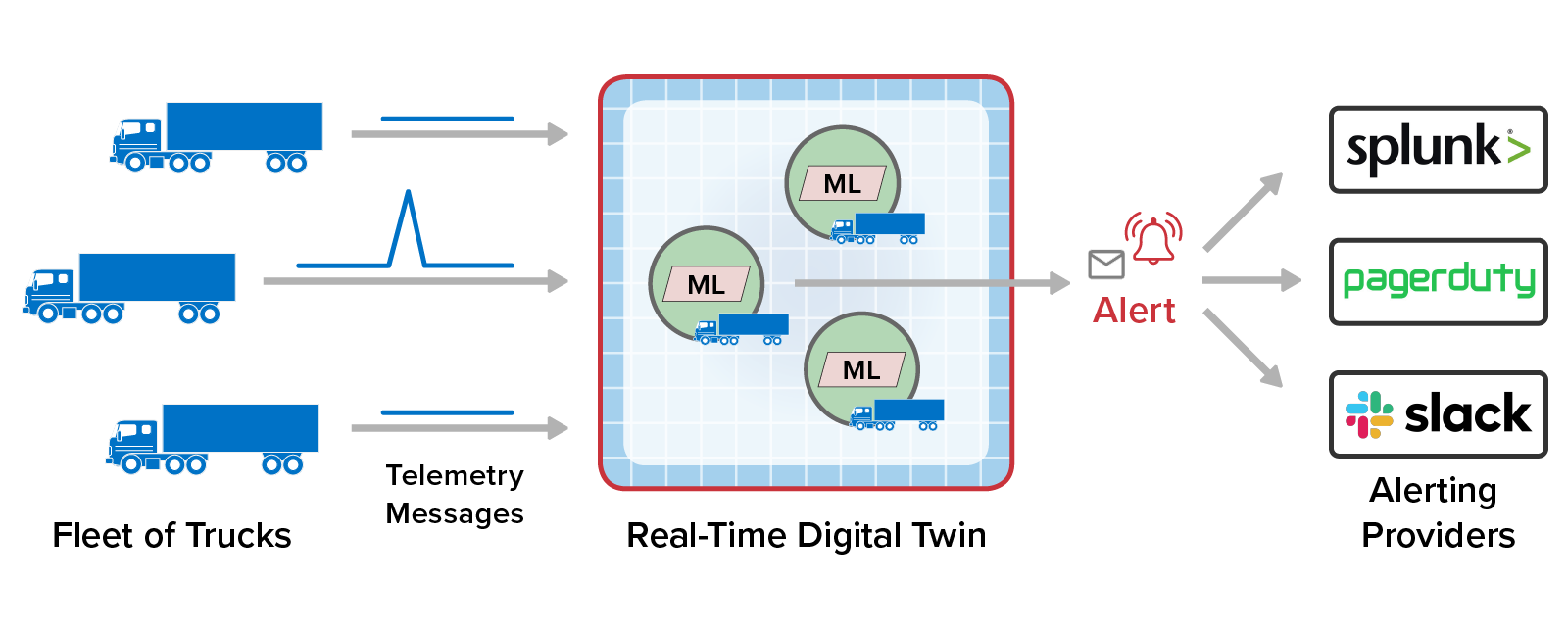

To enable machine learning (ML) within real-time digital twins, ScaleOut Software has integrated ML.NET, Microsoft’s popular machine learning library, into its real-time digital twin architecture. Using the ScaleOut Model Development Tool, users can select, train, evaluate, deploy, and test ML algorithms within their real-time digital twin models. Once deployed, the selected ML algorithm runs independently for each data source, examining incoming telemetry within milliseconds after it arrives and logging abnormal events. Real-time digital twins can also be configured to generate alerts and send them to popular alerting providers, such as Splunk, Slack, and PagerDuty. In addition, business rules can optionally be used to further extend real-time analytics.

The following diagram illustrates the use of an ML algorithm to analyze engine and cargo parameters being monitored by a real-time digital twin tracking each truck in a fleet. When abnormal parameters are detected by the ML algorithm (as illustrated by the spike in the telemetry), the real-time digital twin records the incident and sends a message to the alerting provider:

Straightforward Development Workflow

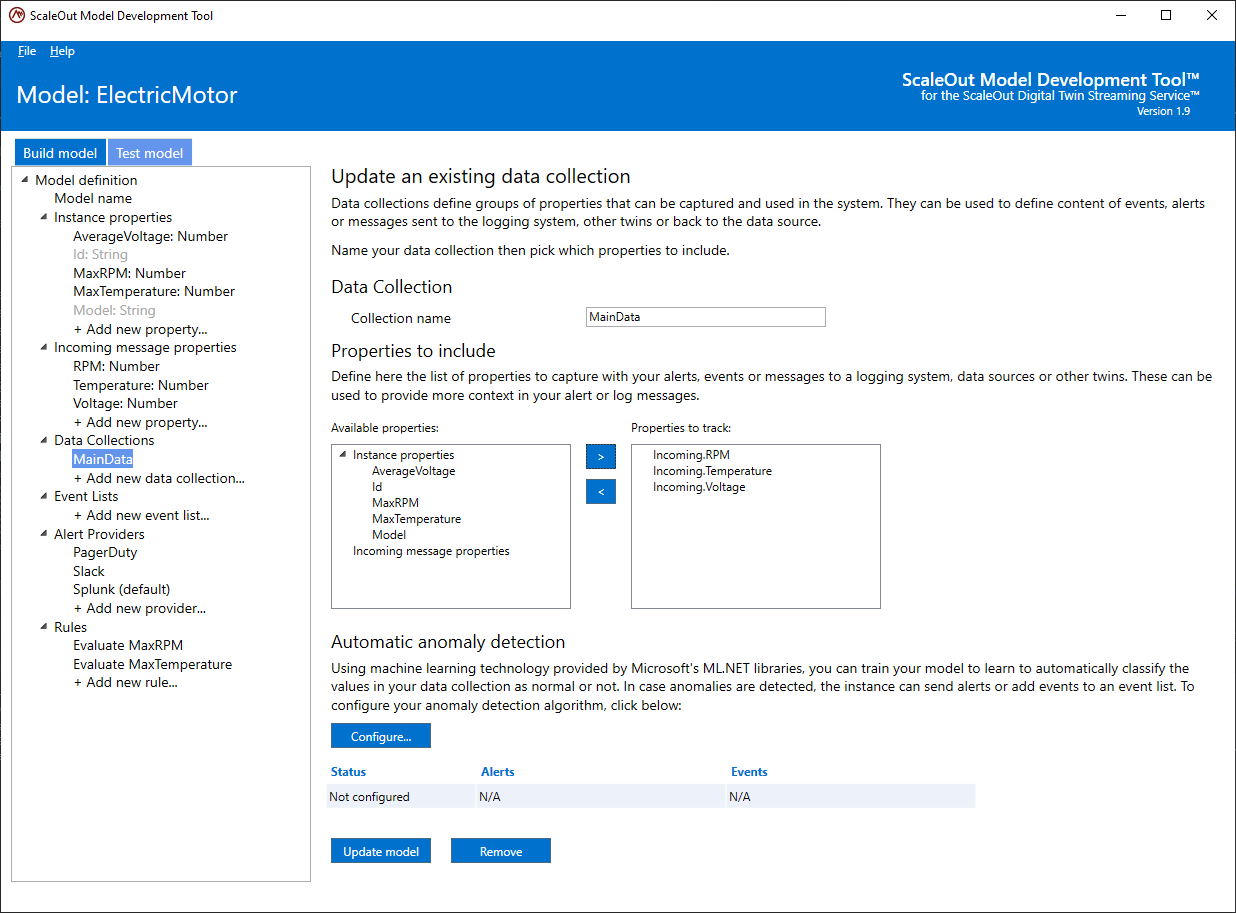

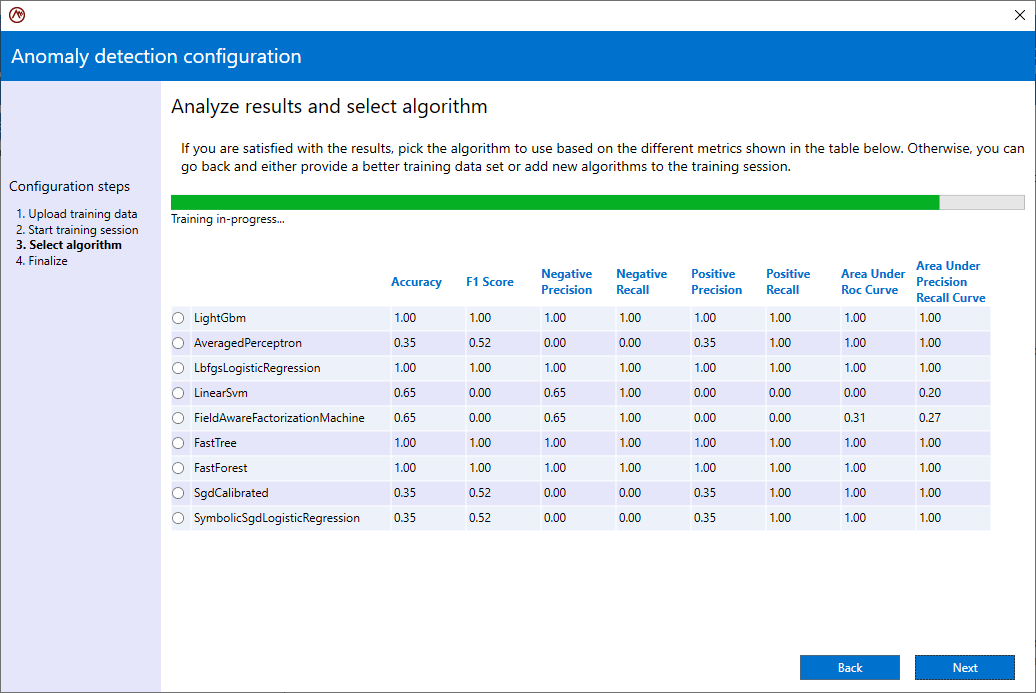

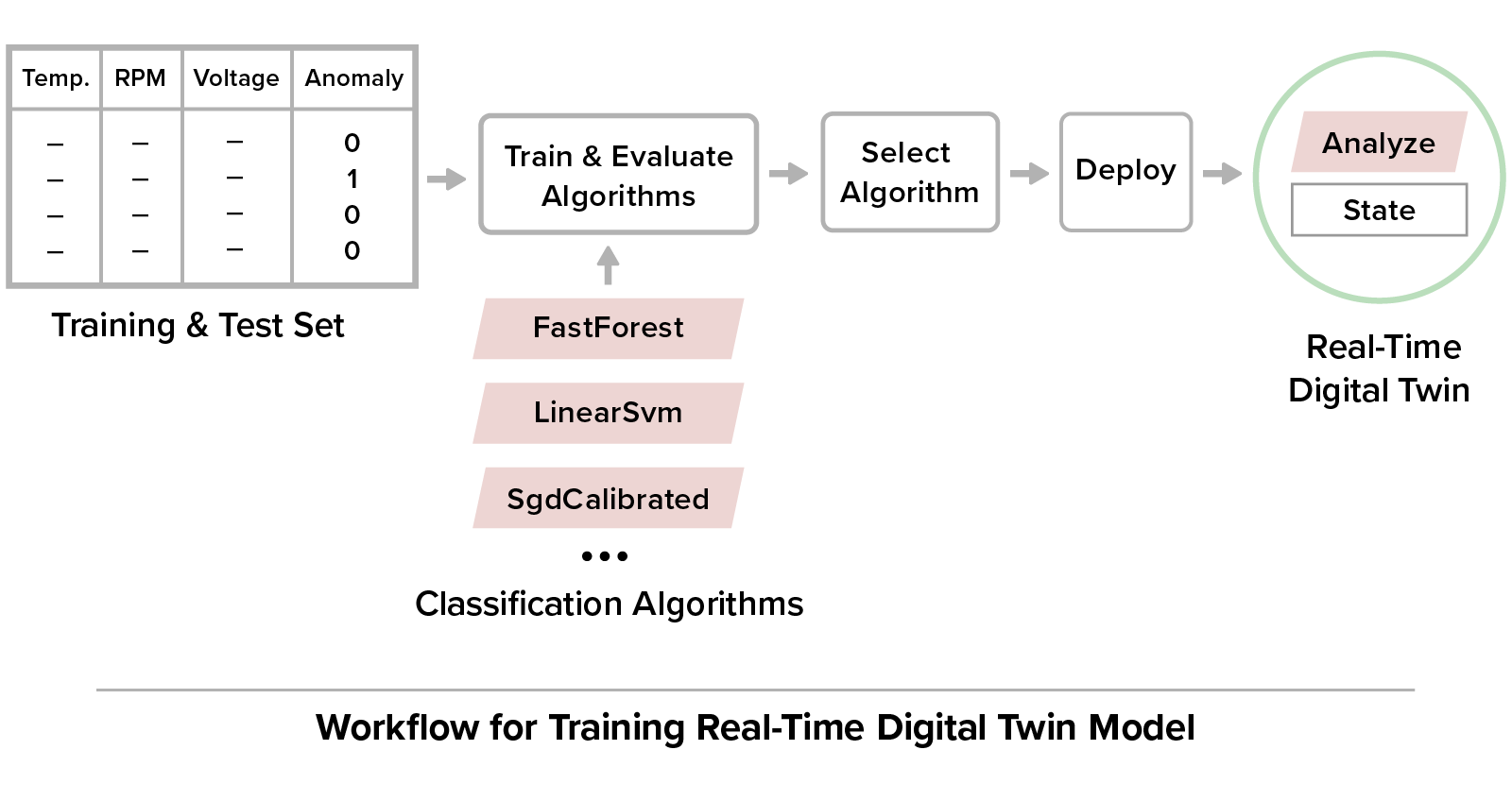

Training an ML algorithm to recognize abnormal telemetry requires only a training set of historic data that have been classified as normal or abnormal. Using this training data, the ScaleOut Model Development Tool lets the user train and evaluate up to ten binary classification algorithms supplied by ML.NET using a technique called supervised learning. The user can then select the appropriate trained algorithm to deploy based on the metrics generated for each algorithm during training and testing. (The algorithms are tested using a portion of the data supplied for training.)
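The train, evaluate, and select loop described above can be sketched in a few lines of Python. This is only an illustration of supervised binary classification with a held-out test portion. It does not use ML.NET or the ScaleOut Model Development Tool, the telemetry is synthetic, and the two toy classifiers (`TemperatureThreshold`, `NearestCentroid`) and every other name here are assumptions made for the example.

```python
import random
from statistics import mean, pstdev

def make_labeled_telemetry(n=400, seed=7):
    """Synthetic (temperature, rpm, voltage) readings labeled normal/abnormal."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        abnormal = rng.random() < 0.3
        features = (
            rng.gauss(95 if abnormal else 70, 8),        # temperature
            rng.gauss(1500 if abnormal else 1800, 120),  # RPM
            rng.gauss(228 if abnormal else 230, 4),      # voltage
        )
        rows.append((features, abnormal))
    return rows

def train_test_split(rows, test_fraction=0.2, seed=7):
    """Hold back a portion of the labeled data for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - int(len(shuffled) * test_fraction)
    return shuffled[:cut], shuffled[cut:]

class TemperatureThreshold:
    """Toy classifier: abnormal if temperature is above a learned midpoint."""
    name = "temperature threshold"
    def fit(self, rows):
        normal = [f[0] for f, abnormal in rows if not abnormal]
        bad = [f[0] for f, abnormal in rows if abnormal]
        self.threshold = (mean(normal) + mean(bad)) / 2
        return self
    def predict(self, features):
        return features[0] > self.threshold

class NearestCentroid:
    """Toy classifier: pick the class whose standardized centroid is closer."""
    name = "nearest centroid"
    def fit(self, rows):
        columns = list(zip(*(f for f, _ in rows)))
        self.means = [mean(c) for c in columns]
        self.stds = [pstdev(c) or 1.0 for c in columns]
        self.centroids = {}
        for label in (False, True):
            scaled = [self._scale(f) for f, abnormal in rows if abnormal == label]
            self.centroids[label] = [mean(c) for c in zip(*scaled)]
        return self
    def _scale(self, features):
        return [(x - m) / s for x, m, s in zip(features, self.means, self.stds)]
    def predict(self, features):
        z = self._scale(features)
        def dist(label):
            return sum((a - b) ** 2 for a, b in zip(z, self.centroids[label]))
        return dist(True) < dist(False)

def evaluate(model, test_rows):
    """Compute common binary classification metrics on held-out data."""
    tp = fp = tn = fn = 0
    for features, abnormal in test_rows:
        predicted = model.predict(features)
        if predicted and abnormal:
            tp += 1
        elif predicted:
            fp += 1
        elif abnormal:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(test_rows),
            "precision": precision, "recall": recall, "f1": f1}

train_rows, test_rows = train_test_split(make_labeled_telemetry())
results = {}
for model in (TemperatureThreshold(), NearestCentroid()):
    results[model.name] = evaluate(model.fit(train_rows), test_rows)

# Select the candidate to deploy based on its test metrics (F1 here).
best = max(results, key=lambda name: results[name]["f1"])
for name, metrics in results.items():
    print(name, {k: round(v, 3) for k, v in metrics.items()})
print("selected:", best)
```

Choosing by F1 score is one option among several. When missed failures are costlier than false alarms, recall may be the better metric to select on.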

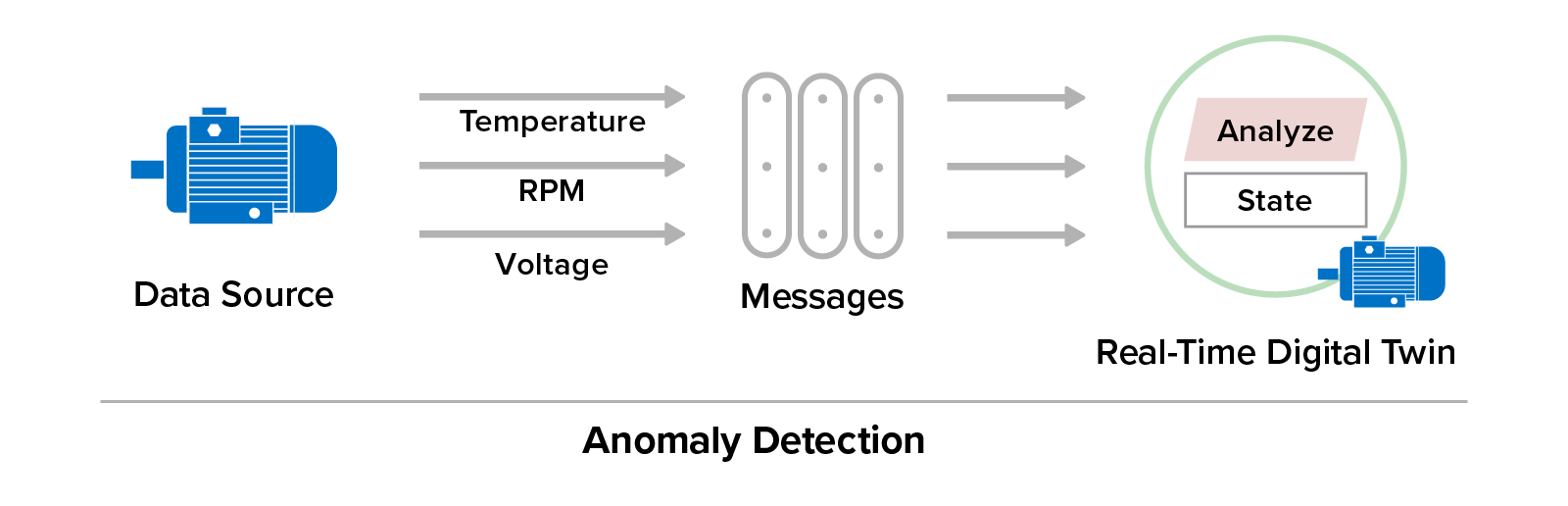

For example, consider an electric motor that periodically supplies three parameters (temperature, RPM, and voltage) to its real-time digital twin for monitoring by an ML algorithm that detects anomalies and generates alerts when they occur:

Training the real-time digital twin’s ML model follows this workflow:

Additional Support for Spike and Trend Detection

In addition to enabling multi-parameter, supervised learning for anomaly detection, the Model Development Tool provides support for detecting spikes in individual telemetry parameters. Spike detection uses an ML.NET algorithm (called an adaptive kernel density estimation algorithm) which detects rapid changes in telemetry for a single parameter.
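To give a feel for single-parameter spike detection, here is a minimal Python sketch. It deliberately swaps in a much simpler technique, a rolling z-score over recent readings, instead of the adaptive kernel density estimation used by ML.NET. The `SpikeDetector` class, its window size, and its threshold are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, pstdev

class SpikeDetector:
    """Flags a reading that deviates sharply from the recent baseline.

    Stand-in technique: rolling z-score (not adaptive kernel density estimation).
    """
    def __init__(self, window=20, threshold=4.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value):
        is_spike = False
        # Only score once a full window of baseline readings is available.
        if len(self.history) == self.history.maxlen:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_spike = True
        # Keep spikes out of the baseline so they do not mask later ones.
        if not is_spike:
            self.history.append(value)
        return is_spike

# Steady temperature with small ripple and one injected spike at index 30.
readings = [70 + (i % 5) * 0.2 for i in range(40)]
readings[30] = 95.0

detector = SpikeDetector()
spikes = [i for i, value in enumerate(readings) if detector.observe(value)]
print("spike indices:", spikes)
```

A z-score assumes the baseline is roughly bell-shaped. A density-based method makes fewer assumptions about the distribution, which is one reason a production detector might prefer it.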

It is also useful to detect unusual but subtle changes in a parameter’s telemetry over time. For example, if the temperature for an electric motor is expected to remain constant, it may be important to detect a slow rise in temperature that might otherwise go unobserved. To address this need, the ScaleOut Model Development Tool uses a ScaleOut-developed linear regression algorithm to detect and report inflection points in the telemetry for selected parameters.
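The idea of catching a slow drift with linear regression can be sketched as follows. This is not ScaleOut's algorithm, whose details are not given here. It is a simple stand-in that fits a least-squares line to a sliding window of readings and reports the first sample at which the fitted slope exceeds a limit. The function names, window size, and slope limit are assumptions.

```python
def slope(values):
    """Least-squares slope of values against their sample index."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    numerator = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    denominator = sum((i - x_mean) ** 2 for i in range(n))
    return numerator / denominator

def find_trend_start(values, window=15, min_slope=0.05):
    """Return the index of the sample at which a trend is first reported.

    A trend is reported when the slope fitted over the most recent `window`
    samples exceeds `min_slope` in magnitude. Returns None if none is found.
    """
    for end in range(window, len(values) + 1):
        if abs(slope(values[end - window:end])) > min_slope:
            return end - 1
    return None

# 30 samples hovering around 70 degrees, then a slow rise of 0.1 per sample.
flat = [70 + 0.1 * (-1) ** i for i in range(30)]
ramp = [70 + 0.1 * k for k in range(1, 31)]
temperatures = flat + ramp

detected_at = find_trend_start(temperatures)
print("trend first reported at sample", detected_at)
```

A wider window smooths out noise but delays detection, so the window size trades responsiveness against false reports.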

These two techniques for tracking changes in a telemetry parameter are illustrated below:

Automatic Event Correlation

ScaleOut StreamServer automatically correlates incoming messages from each data source for delivery to its respective real-time digital twin. This simplifies design by eliminating the need for application code to extract messages from a combined event pipeline for analysis.
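Conceptually, this correlation amounts to routing each message to an object keyed by its data source. The Python sketch below illustrates that idea only. It does not reflect the ScaleOut StreamServer API, and the `Message`, `Twin`, and `Dispatcher` names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    source_id: str   # identifies the device that sent the telemetry
    payload: dict

@dataclass
class Twin:
    """Hypothetical per-source object that receives only its own messages."""
    source_id: str
    received: list = field(default_factory=list)

    def process(self, message):
        self.received.append(message.payload)

class Dispatcher:
    """Routes each message in a combined stream to the twin for its source."""
    def __init__(self):
        self.twins = {}

    def deliver(self, message):
        twin = self.twins.get(message.source_id)
        if twin is None:
            # Create the twin the first time a source is seen.
            twin = self.twins[message.source_id] = Twin(message.source_id)
        twin.process(message)
        return twin

# A combined stream interleaving messages from two trucks.
stream = [
    Message("truck-1", {"temp": 4.0}),
    Message("truck-2", {"temp": 5.5}),
    Message("truck-1", {"temp": 4.2}),
]
dispatcher = Dispatcher()
for message in stream:
    dispatcher.deliver(message)

for source_id, twin in dispatcher.twins.items():
    print(source_id, twin.received)
```

Because the dispatcher does the routing, the code in `Twin.process` never has to filter a shared pipeline for its own messages.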

State Information for Each Data Source

Each real-time digital twin maintains state information about its corresponding data source without having to access external databases or caches. For example, ML algorithms can maintain a time-stamped list of significant events to enable additional analysis.
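As a rough illustration of per-source state, the Python sketch below keeps a time-stamped incident list inside a twin object and uses it for a follow-up check. The `MotorTwin` class, its fixed temperature limit (standing in for an ML prediction), and the escalation rule are all hypothetical and are not part of any ScaleOut API.

```python
from dataclasses import dataclass, field

@dataclass
class MotorTwin:
    """Hypothetical twin holding state for one motor in memory."""
    motor_id: str
    max_temp: float = 90.0   # assumed limit standing in for an ML prediction
    incidents: list = field(default_factory=list)   # (timestamp, temperature)

    def process(self, timestamp, temperature):
        # Record each abnormal reading with the time it was observed.
        if temperature > self.max_temp:
            self.incidents.append((timestamp, temperature))

    def incidents_since(self, cutoff):
        return [event for event in self.incidents if event[0] >= cutoff]

    def needs_escalation(self, now, window_seconds=60, limit=3):
        # Additional analysis over stored state: repeated incidents in a window.
        return len(self.incidents_since(now - window_seconds)) >= limit

twin = MotorTwin("motor-7")
readings = [(0, 85.0), (10, 93.0), (20, 95.0), (30, 88.0), (40, 97.0)]
for timestamp, temperature in readings:
    twin.process(timestamp, temperature)

print(twin.incidents)
print("escalate at t=40:", twin.needs_escalation(now=40))
print("escalate at t=100:", twin.needs_escalation(now=100))
```

Keeping the list on the twin itself means the follow-up check reads local state only, with no round trip to a database or cache.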